2014 was a sparse year for blogging and other projects. But at least some progress was made on my CPU project, including a major milestone: namely, running its first compiled program.

Stored-program computer

Firstly, the CPU can now load a program into instruction memory and then run it. Until now, the program had to be “hardcoded” into the circuit, a situation not unlike that of early computers, which had to be rewired to perform a different computation. The stored-program computer, which runs a program held in memory, was a major milestone in the history of computing.

Of course, one could simply have hardcoded a virtual machine program, and thenceforth have that VM run programs stored in data memory. (A primitive virtual machine is not too hard to write in assembly language.) But that would be ridiculous: an FPGA running a circuit that implements an instruction set, in which is written a program that simulates a computer that runs programs from data memory.

At this point, the CPU had almost caught up with technology from 1948.

DEPP protocol

The main challenge in loading programs was IO. The FPGA board has very simple IO devices. It looked like programs would need to be entered by setting the 8 switches to an 8-bit byte value, then pressing a button to store that byte in memory, and repeating for the remaining 4095 bytes of the program. This is doable, but communicating over a cable from another computer would be far preferable. Ethernet is one option, but implementing it, and then hardcoding a TCP/IP stack, might be a lifetime of work (and leave little room on the FPGA for a CPU and memory).

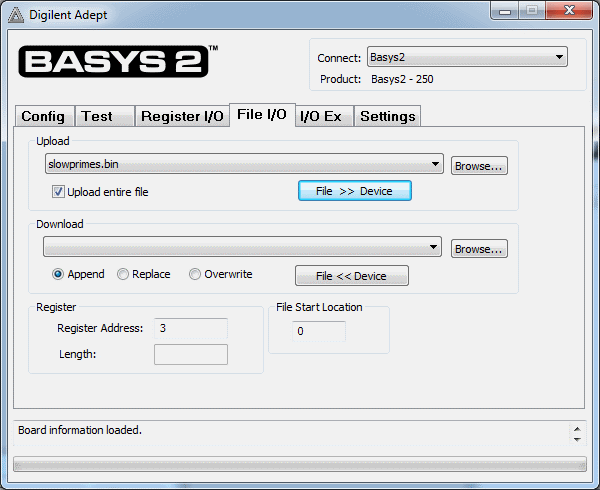

Another possibility is the USB cable to the host, which powers the FPGA and uploads the circuit bitfile. The Digilent board provides something called DEPP (based on EPP, the protocol used by parallel ports). Several FPGA IO pins are assigned to DEPP, and software running on the host can send and receive data, provided those pins are connected to the appropriate circuitry. Implementing the DEPP circuitry was surprisingly easy with the help of online resources such as Hamsterworks (though I did have to learn VHDL to figure out what was going on there).

It felt good to see that first successful byte arrive from the host on the FPGA. Then another byte, and another. But when I sent a test file of 100 bytes… only 83 bytes arrived at the FPGA. I spent several days rewriting the circuit, and copying and testing others’ circuits. Other people’s code had similar but different problems: too many bytes, bytes out of order, or junk bytes. I concluded there was inherent noise in the channel, and had resigned myself to designing an error-correction protocol, when I found out about metastability. Buffering the two clock pins controlled by the host solved the problem. The problem was that while those pins were stabilising, they would waver up and down, and each change would be interpreted as a data event.

The CPU can now be operated as follows:

- The FPGA is switched on and the circuit bitfile uploaded.

- The CPU is in “load” state. (This is displayed on the seven-segment display as “LoAd”. I have the beginnings of a 6-bit character set and a really bad SSD font; you try making Ms and Ws out of seven segments!)

- The host sets the DEPP address to 3 (designated as “upload instruction byte”), and sends bytes of program data.

- The host writes 1 (“reset”) to DEPP address 0 (“state”).

- The CPU performs a reset and begins executing the program from memory.

- Later, the host sets the CPU back to “load” (byte 0 to address 0), sets the instruction load address to 0 (byte 0 to address 2), and sends a new program before again setting the CPU state to “reset”.

I have seen consoles from some computers from around 1960, and I believe they worked like this.

Data memory and IO devices

Late in the year I finally figured out data memory, using Xilinx block RAM primitives. I had earlier spent a lot of time puzzling over it, wondering why words sometimes could not be read back after being written, and eventually put it aside for a few months. On resuming the work I found the problem was a bug in instruction decoding: the register numbers for the value and the address were not taken from the proper parts of the instruction. This is a classic example of the hazard of implementing functionality before it can be tested. The code in question is simple, and I wrote it envisioning how data memory would work, but since it could not be run until data memory itself was working, a simple error went unnoticed until much later.

The CPU’s IO system was also refactored. The FPGA’s switches, buttons and LEDs can be controlled using the IN and OUT instructions. The SSD also has a few new modes: it can display a word (or two) of data as decimal, hexadecimal, or alphanumeric text. As mentioned above, there is a simple character set, in which the digits and the alphabet occupy the first 36 character positions. This is very convenient for base conversion, e.g. when displaying a value in hexadecimal.

One unresolved issue in the CPU design is the relationship between the CPU ports (which a program uses to control the IO devices) and the control ports, such as the CPU state and the instruction memory upload ports. I have an urge to unify them, partly to make the port assignments easier to remember, but mostly to let the host control IO and to let the CPU control its own internal state: for example, a program could alter instruction memory or reboot the CPU by writing to the appropriate ports. The only real hurdle is that DEPP is byte-based, whereas the CPU (and its IN/OUT instructions) works with 16-bit words. Always writing byte-pairs over DEPP would be annoying, as would restricting IO addresses and values to 8 bits.

First compiled program

And finally, I have made some progress on the accompanying compiler. A few weeks ago I was able to take this test program:

```c
//#include <stdio.h>

void main() {
    int max;
    max = 10000;
    int candidate;
    candidate = 2;
    while (candidate < max) {
        int divisor;
        divisor = 2;
        while (divisor < candidate) {
            int multiple;
            multiple = 0;
            while (multiple < candidate) {
                multiple = multiple + divisor;
            }
            if (multiple == candidate) {
                break;
            }
            divisor = divisor + 1;
        }
        if (candidate == divisor) {
            __out__(candidate, 18);
            //printf("%d\n", candidate);
        }
        candidate = candidate + 1;
    }
}
```

And compile it:

```
$ ./compiler.py slowprimes.e > slowprimes.asm
$ cat slowprimes.asm
main::
mov 10000, $r8
mov 2, $r9
L25:
slt $r9, $r8, $r7
br $r7, L7
jmp main$exit
L7:
mov 2, $r5
L31:
slt $r5, $r9, $r3
br $r3, L11
L28:
sub $r9, $r5, $r1
br $r1, L26
L27:
mov 18, $r4
out $r9, $r4
L26:
mov 1, $r2
add $r9, $r2, $r9
jmp L25
L11:
mov 0, $r11
L32:
slt $r11, $r9, $r10
br $r10, L15
L29:
sub $r11, $r9, $r12
br $r12, L30
jmp L28
L15:
add $r11, $r5, $r11
jmp L32
L30:
mov 1, $r6
add $r5, $r6, $r5
jmp L31
main$exit::
```

And assemble it:

```
$ ./asm.py slowprimes.asm -b > slowprimes.bin
```

And upload it to instruction memory:

And run it:

Of course, it did not actually run the first time. This was not due to a bug in the compiler, the assembler, or the CPU, but to a bug in the test program. (As you can see, there is now a commented-out call to C’s printf: I could have treated it as C, compiled it with gcc, and had it correct long before implementing the compiler!)

It did run second time, though. This was very satisfying.